What Is A/B Testing and How To Use It for Your Nonprofit's Success

You have an idea of the message you want to send out about your next campaign, but don’t know if adding a picture or video would make it better or...

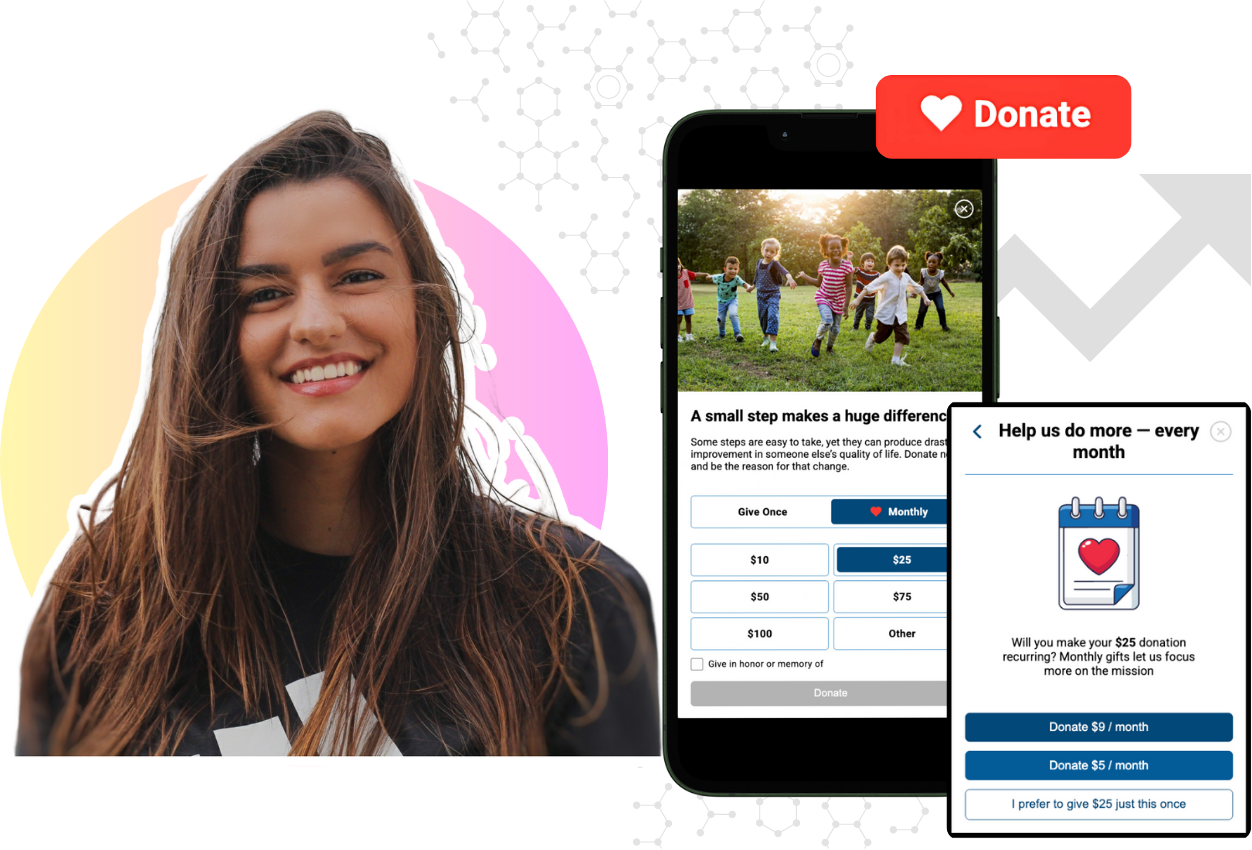


In simple terms, A/B testing is like a friendly competition where two versions of your donation page go head-to-head to see which one performs better.

For nonprofits, this means experimenting with different headlines, images, and call-to-action buttons to discover what truly resonates with your supporters and donors.

The beauty of A/B testing lies in its ability to minimize the risk of changes that could backfire, so every tweak you keep is backed by data.

Ready to dive into the world of testing? Let’s explore the ins and outs, from the basics to the metrics that matter most.

A/B testing is a method of comparing two versions of a webpage or app against each other to determine which one performs better.

For nonprofits, running A/B tests on donation pages and forms is crucial for sustained digital fundraising growth.

It helps identify the most effective design and messaging that maximize conversions (and donations).

By experimenting with different elements such as headlines, images, and call-to-action buttons, nonprofits can optimize their donation forms and pages to increase conversion rates, ultimately leading to more donations and greater support for their missions.

Before we start mixing testing equations, let’s cover a few key terms that will pop up often.

By default, your original donation page is the control (or champion) for the A/B test. It provides a baseline for current performance: a set of statistics that defines the performance any challenger has to beat.

And, like all champions, it is bound to fall to a challenger at some point.

When launching a new donation page A/B test, the variant that is created to test a hypothesis is typically referred to as the challenger variant (or treatment).

Labeling variants this way makes results easy to track and, if the challenger outperforms the champion/control, makes it easy to promote the treatment as the new champion.
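Behind the scenes, a testing tool has to decide which visitors see the champion and which see the challenger. As a minimal sketch of one common approach (an illustration only, not a description of how iDonate does it), the function below hashes a hypothetical visitor ID together with a hypothetical test name, so each visitor lands in the same variant on every visit and traffic splits roughly 50/50.

```python
import hashlib


def assign_variant(visitor_id: str, test_name: str, challenger_share: float = 0.5) -> str:
    """Deterministically assign a visitor to 'champion' or 'challenger'.

    Hashing test_name together with visitor_id means the same visitor always
    sees the same variant within one test, while separate tests split
    independently of each other.
    """
    key = f"{test_name}:{visitor_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Map the first 8 hex digits (32 bits) of the hash to a number in [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    return "challenger" if bucket < challenger_share else "champion"


if __name__ == "__main__":
    # Hypothetical visitor IDs and test name, for illustration only.
    for vid in ["visitor-001", "visitor-002", "visitor-003"]:
        print(vid, assign_variant(vid, "gift-array-test"))
```

Keeping assignment sticky matters because a visitor who flips between versions would muddy both variants’ numbers.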

Statistical relevance (or significance) describes how unlikely it is that a result or relationship observed in your data happened by chance alone.

In the context of testing nonprofit donation pages, it means evaluating changes (like changes to a gift array) to see whether they genuinely improve your donation conversion rate or whether the observed effect might just be a random hiccup in the results.

Remember, there will always be some margin of statistical error in any test, so results are best used as a guide toward a higher-performing donation page.
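To make “statistically significant” concrete, here is a minimal sketch of a two-proportion z-test, one standard way to compare the conversion rates of a champion and a challenger. The visitor and donation counts are hypothetical, and this is a generic statistical technique, not necessarily the calculation any particular testing tool uses.

```python
from math import erf, sqrt
from typing import Tuple


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> Tuple[float, float]:
    """Compare two conversion rates; return (z statistic, two-sided p-value).

    conv_a / n_a: donations and visitors for the control (champion).
    conv_b / n_b: donations and visitors for the challenger.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both pages convert equally.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    if p_pool in (0.0, 1.0):
        raise ValueError("Need at least one conversion and one non-conversion overall.")
    se = sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Standard normal CDF via the error function: Phi(x) = 0.5 * (1 + erf(x / sqrt(2))).
    phi = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    p_value = 2 * (1 - phi)
    return z, p_value


# Hypothetical numbers: 2,000 visitors per variant,
# 80 donations on the champion (4%) vs 110 on the challenger (5.5%).
z, p = two_proportion_z_test(80, 2000, 110, 2000)
print(f"z = {z:.2f}, p-value = {p:.3f}")
```

With these made-up numbers, z comes out around 2.2 and the p-value lands under the commonly used (and somewhat arbitrary) 0.05 threshold. The normal approximation works best with reasonably large samples, and checking results over and over before the planned sample size is reached raises the odds of a false “win.”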

Yes, testing is a good way of seeing if your wild donation page ideas *might* actually work.

But you should really think of A/B testing as a way to learn more about your donors and what they want.

With key learnings from A/B tests gathered over time, you can make your donation pages more donor-centric while maximizing sustained growth.

From an operational standpoint, there are three primary reasons why your new anthem should be “test it out 🧪”.

First up: higher conversion rates. Yep, it’s an obvious pick, but conversions are the primary purpose of a donation page, so we’re going with it.

Through A/B testing, nonprofits can experiment with various elements on their donation pages to achieve higher conversion rates while tracking and understanding the changes that led to positive (or sometimes negative) outcomes.

After all, if you converted 1% more website traffic each month, how would that impact your mission?

Why aim for higher conversion rates on your donation pages?

To maximize the impact of every website visitor.

Whether you're already running fundraising campaigns or plan to start, increasing conversions is crucial. Each donation is a step in a larger donor journey, leading to greater engagement and support.

For instance, if you're investing $10,000 in a PPC campaign to drive traffic to your donation page, why not optimize that page through testing to ensure you're getting the most out of your investment?

Ultimately, your nonprofit’s goals are tied to the actual funds raised through these conversions.

Higher conversion rates = more donations = greater impact.
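That equation can be made literal: donations equal visitors times conversion rate, and revenue equals donations times the average gift. The sketch below runs the $10,000 PPC example with hypothetical inputs (10,000 visitors and a $75 average gift are assumptions, not figures from this article) at two conversion rates.

```python
def campaign_outcome(visitors: int, conversion_rate: float, average_gift: float, ad_spend: float):
    """Return (donations, revenue, cost per donation) for one traffic source."""
    donations = round(visitors * conversion_rate)
    revenue = donations * average_gift
    # Guard against dividing by zero when no one converts.
    cost_per_donation = ad_spend / donations if donations else float("inf")
    return donations, revenue, cost_per_donation


# Hypothetical inputs: a $10,000 PPC campaign driving 10,000 visitors, $75 average gift.
for rate in (0.04, 0.05):
    donations, revenue, cpd = campaign_outcome(10_000, rate, 75.0, 10_000.0)
    print(f"{rate:.0%} conversion: {donations} gifts, ${revenue:,.0f} raised, ${cpd:.2f} per gift")
```

Under these assumptions, the same ad spend moves from 400 gifts to 500, and the cost per gift drops from $25 to $20, without buying a single extra click.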

The second reason: reducing risk. We’ve all had that sinking feeling at one point or another.

You have a vision for what your donation page could look like, and you’re incredibly confident that conversions and donations will skyrocket once you implement the changes.

You're so sure that you jump straight into the editor, make the changes, and go live.

…and then you check your donation page/campaign dashboard the following week…

Your conversion rate dropped from 4% to just 1%.

Ooof.

Instead of making drastic changes with your fingers crossed, you can significantly reduce the risk by running a simple split test first.

In the best case, your new version outperforms the old one. In the worst case, only part of your traffic sees the weaker version, and you can quickly revert if things don’t go as planned.

The third reason: replacing guesswork with data. Many nonprofit organizations base their decisions on a mix of experience, intuition, and personal opinion when determining what might resonate best with donors.

While this approach occasionally succeeds, it often falls short.

When you begin A/B testing, it’s essential to set aside assumptions and focus on data-driven insights: properly interpreted data doesn’t lie.

There are numerous elements you can experiment with during your testing. While you have control over the variations and content, the ultimate decision of what works best lies with your donors.

Using iDonate, consider split testing these elements on your donation page:

Testing has shown that including a value proposition (a section where you explain why someone should give and how donations advance your mission) as the first section of your donation page can lift donor conversion by as much as 150%.

Testing value proposition content, length, and layout is easy to accomplish and can be one of the clearest paths to success, especially if you are moving from no value prop copy to a well-thought-out section.

Nonprofits of all shapes and sizes are working through how to launch (or grow) their recurring giving programs.

It makes sense, as monthly giving can provide nonprofits with a reliable fundraising revenue stream.

Something as simple as adding tabs that let donors choose how they want to support your mission (for example, one-time or monthly) is a great place to start testing.

Also worth testing? Having the monthly giving option auto-selected.

When it comes to gift arrays, there are so many possible combinations and values that it would be nearly impossible to land on the perfect combination for your donation page on the first try.

Further, what appears to be working now may not work as well 6 months from now.

Ever wondered whether 4 donation options perform better than 6 in your gift array?

Test it out.

The same goes for finding the sweet spot for the dollar values in your gift array.

Tripling down on this, you can even test whether showing your gift array in ascending order is more impactful than descending order.

Helping potential donors build downward momentum on your donation form is key to maximizing conversions.

The key here is to include only the fields absolutely required to complete a donation. But if you feel you really need a subscription checkbox in this section, there’s an easy way to see whether it’s hurting conversion rates...

Test it out.

Wondering whether removing the ACH payment option from your donation form will decrease overall conversions and donation dollars?

Test it out.

Are you seeing potential donors make it all the way to the payment information section of your donation form and then bail?

One of the easiest ways to combat this is to test adding copy about payment processing security, along with a padlock icon.

Ever wondered whether the tried-and-true “checkout upsell” strategies from e-commerce sites would work to convert one-time donors into monthly supporters?

Sounds like a job for A/B testing.

Remember, though: this might lower metrics like average donation size, but the trade-off is that you are building your monthly giving program, where retention rates hover in the 70% to 90% range.

Ok, we’ve got the basics of A/B testing down.

Next up: planning to launch your first A/B test.

For this step-by-step process, we’re going to tag in our favorite testing partners, NextAfter, to outline how you should approach planning and executing your first donation page A/B test.

(Psst. What we’re about to cover is a high-level overview of setting up your A/B test. If you want to get a full deep dive into this topic, check out NextAfter’s A/B testing guide for online fundraising.)

Start your A/B test by defining clear goals based on data and observations.

Effective goals come from fundraising benchmarks, donation analytics, donor feedback, and market research.

Even gut instincts can be worth testing.

Accurate tracking is crucial for an effective A/B test — you can’t optimize what you can’t measure.

Ensure you have three layers of tracking in place: traffic, conversions, and revenue.

This step can be tricky, but by using iDonate, you can track and optimize your donation page performance based on these 3 core measurements.

You’ve got your goal in mind and you’ve established that it’s measurable.

It’s time to draft your hypothesis.

Yes, it may feel like we’re back in high school chemistry class, but trust us: this step is crucial for assessing whether the test was a success, a failure, or led to completely unexpected insights altogether.

NextAfter provides an easy-to-use template for drafting your hypothesis:

Because we saw (data/feedback), we expect that (change) will cause (impact). We will measure this using (metric).

Breaking this down, here’s what each key input means:

And finally, a real-life example of a donation page hypothesis:

Because we saw low conversion rates, we expect that adding more value proposition copy will cause greater donor response. We will measure this using total donations.

Setting your sample size will be critical to ensuring your test reaches a statistically relevant outcome.

Cutting your experiment short could lead to inconclusive or incomplete results based more on random chance than true optimization.

With iDonate, you can set and track sample sizes based on traffic, donations, or a specific time frame.
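Sample size calculators for conversion tests generally rely on a standard formula for comparing two proportions. As a minimal sketch (assuming a two-sided test at 5% significance and 80% power, common defaults rather than settings from iDonate or NextAfter), the function below estimates how many visitors each variant needs to detect a given lift.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_variant(baseline_rate: float, expected_rate: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate visitors needed per variant to detect baseline -> expected.

    Uses the normal-approximation formula for a two-sided comparison of
    two proportions.
    """
    if baseline_rate == expected_rate:
        raise ValueError("expected_rate must differ from baseline_rate")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)            # about 0.84 for 80% power
    p_bar = (baseline_rate + expected_rate) / 2
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(baseline_rate * (1 - baseline_rate)
                        + expected_rate * (1 - expected_rate))
    ) ** 2
    return ceil(numerator / (expected_rate - baseline_rate) ** 2)


# Hypothetical: 4% baseline conversion, hoping to detect a lift to 5%.
print(sample_size_per_variant(0.04, 0.05))
```

For this hypothetical page, that works out to roughly 6,700 visitors per variant. Because the required size grows with the inverse square of the difference you want to detect, small expected lifts need far more traffic than big ones.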

How do you arrive at the ideal sample size for your experiment? Here are a few resources you can use to get a good read on your experiment’s sample size:

Thankfully, half the work is already done, since your champion donation form is already live.

From here, using iDonate’s A/B testing module, you can quickly create the challenger variant that supports the hypothesis created in step 3.

Further, you can use NextAfter’s checklist to make sure your variant supports your hypothesis before launching:

Since iDonate has a built-in donation form A/B testing tool with these steps built into its native workflow, all that’s left is to smash the publish button and track results.


If you are working with a third-party tool or testing plugin, there may be extra steps to launch a successful A/B test. NextAfter provides a handy checklist to make sure you’ve got all the testing bases covered:

No matter how hard it might be, let the data lead the outcomes and next steps once your test has hit statistical relevance.

In case you are wondering when to shut down your test, here are a few key guidelines that NextAfter provides:

If you are using iDonate’s A/B testing tool, you’re able to easily promote the winning variant based on statistical outcomes.

All tests are also stored for historical review and for sharing with your team.

You’ve launched the winning variant, but the job isn’t done here.

Prolonged, sustainable digital fundraising growth relies on your team’s dedication to continual A/B testing.

Test your value proposition.

Test your gift arrays.

Test your donation form.

Test your security form copy.

Test your CTAs.

Keep going. It may seem daunting and tedious, but keep this in mind: what would your nonprofit look like if you increased conversion rates by 1% every month for a year?

Or if you saw a 10% increase in average gift size every 6 months?

You won’t see those positive changes if you don’t commit to making testing and optimization a mindset.
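Those small gains compound. The sketch below runs the numbers from the two questions above, assuming “1%” means a relative lift (for example, 4.00% to 4.04%) rather than a full percentage point, and assuming traffic stays flat so revenue scales with conversion rate times average gift.

```python
def compound(rate_per_period: float, periods: int) -> float:
    """Growth multiplier after applying the same relative lift repeatedly."""
    return (1 + rate_per_period) ** periods


conversion_multiplier = compound(0.01, 12)  # +1% (relative) each month for 12 months
gift_multiplier = compound(0.10, 2)         # +10% average gift every 6 months, for 1 year

print(f"Conversion rate: {conversion_multiplier - 1:.1%} higher")
print(f"Average gift:    {gift_multiplier - 1:.1%} higher")
# Revenue = visitors x conversion rate x average gift, so with flat traffic
# the two lifts multiply together.
print(f"Revenue:         {conversion_multiplier * gift_multiplier - 1:.1%} higher")
```

Under those assumptions, a year of steady testing adds about 12.7% to the conversion rate, 21% to the average gift, and roughly 36% to revenue from the same traffic.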

When setting up testing metrics, remember that most nonprofits only see enough traffic to validate tests using donation-related numbers.

With that in mind, we’ve outlined a handful of key donation-focused metrics that nonprofits of any shape and size can use to launch (or refine) their A/B tests:

After your test has been active for a while, it’s time to don your lab coat and start analyzing.

The duration of your A/B test depends on various factors, so the timing for diving into the data will vary case by case.

Once you start evaluating your test, here’s a simple framework to follow:

First, analyze how your primary metric is trending.

Assess your progress toward any target set during the planning phase. Did you hit the target? Are you close but slightly behind? Or are you miles away, moving in the wrong direction?

At this stage, focus on the top-line data. We’ll delve deeper next.

Even though you’re optimizing for one metric, dozens of others may be impacted. Before declaring your A/B test a winner or loser based solely on the primary metric, spend time exploring other related metrics like time on page, lead quality, and donation completion rates.

Ask yourself a few high-level questions:

After digging into the data, if your test results are statistically significant, you should be ready to “call” your hypothesis.

You have two options:

This might be the most important step of all. Before closing the book on an A/B test, come up with 5-10 new ideas or questions based on what you learned.

These ideas don’t need to be perfect or even make complete sense yet. What matters is continually “feeding the machine” of your A/B testing engine. If you generate five new ideas at the end of each test, your idea bank will grow quickly.

Important note: You don’t need to launch every idea. Even if you implement only 10%, you’ll never be stuck staring at a blank “create a new variant” screen again.

Always be testing.

You know, everyone makes mistakes - but please, learn from some of the A/B testing lessons we've had to figure out the hard way.

There are plenty of A/B testing tools on the market today, and you can find a pretty extensive list of options here.

Here’s the thing, though: None of these options were built specifically for nonprofits, let alone built directly into an online donation platform.

So, we went looking for a tool that gives nonprofits the best of both worlds and, to no surprise, it’s iDonate.

We’ve spent the last handful of years listening to nonprofits and working with great organizations like NextAfter to create the best (and only) in-platform donation page A/B testing tool that you can get.

Here are the CliffsNotes on what you get with iDonate’s A/B testing tool:

Remember when we discussed how to figure out your A/B test’s ideal sample size?

Time to get to it.

The sample size calculator will go a long way to figuring out how long your A/B test should run for your donation forms and pages.

Remember: It depends on many factors, so there's no universal answer. With enough traffic and a big impact, you might get results in days.
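Once you have a per-variant sample size (for example, from a sample size calculator), a rough duration estimate is simple division. The sketch below assumes traffic splits evenly across variants; the visitor counts are hypothetical, and rounding up to full weeks is an optional, commonly used practice so that every day of the week is represented equally.

```python
from math import ceil


def estimate_test_days(sample_per_variant: int, daily_visitors: int,
                       variants: int = 2, round_to_full_weeks: bool = False) -> int:
    """Rough number of days for every variant to reach its target sample size.

    Assumes traffic is split evenly across variants and daily_visitors is the
    average number of visitors entering the test each day.
    """
    if daily_visitors <= 0:
        raise ValueError("daily_visitors must be positive")
    days = ceil(sample_per_variant * variants / daily_visitors)
    if round_to_full_weeks:
        # Round up to whole weeks so each weekday is represented equally.
        days = ceil(days / 7) * 7
    return days


# Hypothetical: about 6,700 visitors needed per variant, 500 visitors per day.
print(estimate_test_days(6_700, 500))
print(estimate_test_days(6_700, 500, round_to_full_weeks=True))
```

With these made-up inputs, the test needs about 27 days, or 28 if you round up to four full weeks. Double the daily traffic and the estimate roughly halves.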

You totally can run more than 1 donation form/page test at a time.

Keep in mind, though, that depending on the types of tests you want to run at the same time, it may be better to run them in succession instead. That way, you can build positive momentum and build on each successful test as you go.

For nonprofits optimizing their donation forms and pages, aim for an A/B test success rate of 20% to 30%. Hitting that balance matters, but treat it as a rule of thumb rather than an exact science.

If your success rate exceeds 50%, it indicates that your original pages may lack several best practices and can be significantly improved without extensive A/B testing.

Conversely, a success rate below 10% suggests that your targets might be too ambitious or that your tests are too specific (e.g., changing just one word in a subheading).
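Tracking this is straightforward: count how many completed tests produced a winning challenger. The sketch below applies the thresholds from this section (20% to 30% target, above 50% or below 10% as warning signs) to a hypothetical record of ten tests; the function names and messages are illustrative, not part of any tool.

```python
from typing import Sequence


def success_rate(test_results: Sequence[bool]) -> float:
    """Share of completed tests where the challenger beat the champion."""
    if not test_results:
        raise ValueError("No completed tests yet")
    return sum(test_results) / len(test_results)


def interpret_success_rate(rate: float) -> str:
    """Map a success rate onto the rule-of-thumb ranges from this section."""
    if rate > 0.50:
        return "Very high: original pages may be missing several best practices."
    if rate < 0.10:
        return "Very low: targets may be too ambitious or tests too narrow."
    if 0.20 <= rate <= 0.30:
        return "On target."
    return "Outside the 20-30% target range."


# Hypothetical history: True means the challenger won.
history = [True, False, False, True, False, False, True, False, False, False]
rate = success_rate(history)
print(f"{rate:.0%}: {interpret_success_rate(rate)}")
```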

Our fav lab partners, NextAfter, have a whole page dedicated to outlining the experiments and tests that they run with the nonprofits they work with.

They also provide numerous other ways to level up your testing skills, including blogs, ebooks, courses, and certifications.

The best time to start testing?

There’s no time like the present (aka today).

Testing can be (and should be) easy to start and, with the right tools (ahem, iDonate), it’s a smooth, repeatable process that only has upside.

Here’s the best way to get started:

The testing is in your hands now and we’re always down to be your lab partner.
